PySpark Overview — PySpark 4.0.1 documentation - Apache Spark

PySpark combines Python’s learnability and ease of use with the power of Apache Spark to enable processing and analysis of data at any size for everyone familiar with Python.

Cluster Mode Overview - Spark 4.0.1 Documentation

There are several useful things to note about this architecture: Each application gets its own executor processes, which stay up for the duration of the whole application and run tasks in …

Overview - Spark 4.0.1 Documentation

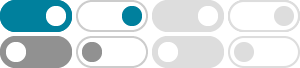

Spark Connect is a new client-server architecture introduced in Spark 3.4 that decouples Spark client applications and allows remote connectivity to Spark clusters.
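A Spark Connect client reaches the server through a connection string of the form `sc://host:port/;param=value;...`, where the default port is 15002. Parameters include `token`, `use_ssl`, and `user_id`. The helper below is a hypothetical convenience for assembling that string, not part of PySpark. It inserts values verbatim, so they must not contain `;` or `=`.

```python
DEFAULT_CONNECT_PORT = 15002  # default Spark Connect server port


def connect_url(host: str, port: int = DEFAULT_CONNECT_PORT, **params: str) -> str:
    """Build a Spark Connect connection string: sc://host:port/;key=value;key=value.

    Hypothetical helper: parameter values are inserted as-is (no escaping).
    """
    url = f"sc://{host}:{port}"
    if params:
        url += "/;" + ";".join(f"{key}={value}" for key, value in params.items())
    return url


# Usage against a running server (not executed here):
#   from pyspark.sql import SparkSession
#   spark = SparkSession.builder.remote(connect_url("localhost")).getOrCreate()
```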

Application Development with Spark Connect - Spark 4.0.1 …

Spark Connect is available and supports PySpark and Scala applications. We will walk through how to run an Apache Spark server with Spark Connect and connect to it from a client …

Getting Started — PySpark 4.0.1 documentation - Apache Spark

This page summarizes the basic steps required to set up and get started with PySpark. There are more guides shared with other languages, such as Quick Start in Programming Guides at the …

RDD Programming Guide - Spark 4.0.1 Documentation

To run Spark applications in Python without pip installing PySpark, use the bin/spark-submit script located in the Spark directory. This script will load Spark’s Java/Scala libraries and allow you …

Apache Spark™ - Unified Engine for large-scale data analytics

Install with 'pip':
$ pip install pyspark
$ pyspark
Use the official Docker image:
$ docker run -it --rm spark:python3 /opt/spark/bin/pyspark

Spark Connect Overview - Spark 4.0.1 Documentation

What is supported
PySpark: Since Spark 3.4, Spark Connect supports most PySpark APIs, including DataFrame, Functions, and Column. However, some APIs such as SparkContext …

Spark Streaming - Spark 4.0.1 Documentation

Note: Spark Streaming is the previous generation of Spark’s streaming engine. It no longer receives updates and is a legacy project. There is a newer and easier to …

Spark SQL — PySpark 4.0.1 documentation

pyspark.sql.tvf.TableValuedFunction.posexplode_outer
pyspark.sql.tvf.TableValuedFunction.variant_explode
…